Athina AI

Monitor LLMs and Detect Hallucinations in Production

Monitoring large language models (LLMs) in production is essential for keeping their outputs reliable and accurate. A central part of this work is detecting hallucinations: outputs in which the model presents incorrect, unsupported, or nonsensical information as if it were true. To monitor LLMs effectively in production, consider the following strategies:

1. **Regular Performance Audits**: Evaluate samples of the model's outputs on a regular schedule to identify patterns of hallucination. Audits show in which contexts (topics, query types, prompt versions) inaccuracies are most likely to occur.

2. **User Feedback Mechanisms**: Give users an easy way to report inconsistencies or errors they encounter. These reports reveal where the model underperforms and what to improve.

3. **Automated Monitoring Tools**: Use monitoring tools that automatically flag unusual outputs or deviations from expected behavior, so hallucinations can be caught in near real time instead of only during periodic audits.

4. **Data Quality Checks**: Make sure the training data used for the LLM (and, in RAG applications, the documents it retrieves) is high quality and representative of the intended use cases. Poor data quality increases the likelihood of hallucinations.

5. **Continuous Learning**: Set up a feedback loop in which the model is updated regularly based on new data and user interactions, reducing the frequency of hallucinations over time.

By applying these strategies, organizations can make LLMs in production more reliable, so that they deliver accurate, useful outputs with a lower risk of hallucinations.
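As a rough illustration of strategy 3 (automated monitoring), the sketch below flags sentences in a RAG response whose content words mostly do not appear in the retrieved context, a crude signal that the sentence may be unsupported. This is not Athina's method or API: the lexical-overlap heuristic, the function names, the stop-word list, and the 0.5 threshold are all hypothetical choices for illustration.

```python
import re

# Hypothetical, deliberately short stop-word list; a real check would use a fuller list.
STOP_WORDS = {"the", "a", "an", "is", "are", "was", "were", "of", "in", "on",
              "to", "and", "or", "for", "with", "it", "this", "that", "by", "as", "at"}


def content_words(text: str) -> set:
    """Lowercase alphanumeric tokens with stop words removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOP_WORDS}


def split_sentences(text: str) -> list:
    """Naive sentence splitter: breaks on whitespace that follows ., ! or ?."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def unsupported_sentences(response: str, context: str, min_overlap: float = 0.5) -> list:
    """Return response sentences whose content words are mostly absent from the context."""
    context_vocab = content_words(context)
    flagged = []
    for sentence in split_sentences(response):
        words = content_words(sentence)
        if not words:
            continue  # nothing checkable in this sentence
        overlap = len(words & context_vocab) / len(words)
        if overlap < min_overlap:
            flagged.append(sentence)
    return flagged


if __name__ == "__main__":
    context = "The Eiffel Tower is in Paris. It was completed in 1889."
    response = "The Eiffel Tower is in Paris. It was designed by Leonardo da Vinci."
    for sentence in unsupported_sentences(response, context):
        print("FLAGGED:", sentence)  # FLAGGED: It was designed by Leonardo da Vinci.
```

Word overlap is cheap enough to run on every response, but it misses paraphrases and cannot judge whether a statement is actually true. Production evaluators often rely on stronger checks, such as natural-language-inference models or an LLM acting as a judge, with a heuristic like this serving at most as a first-pass filter.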

AI Project Details

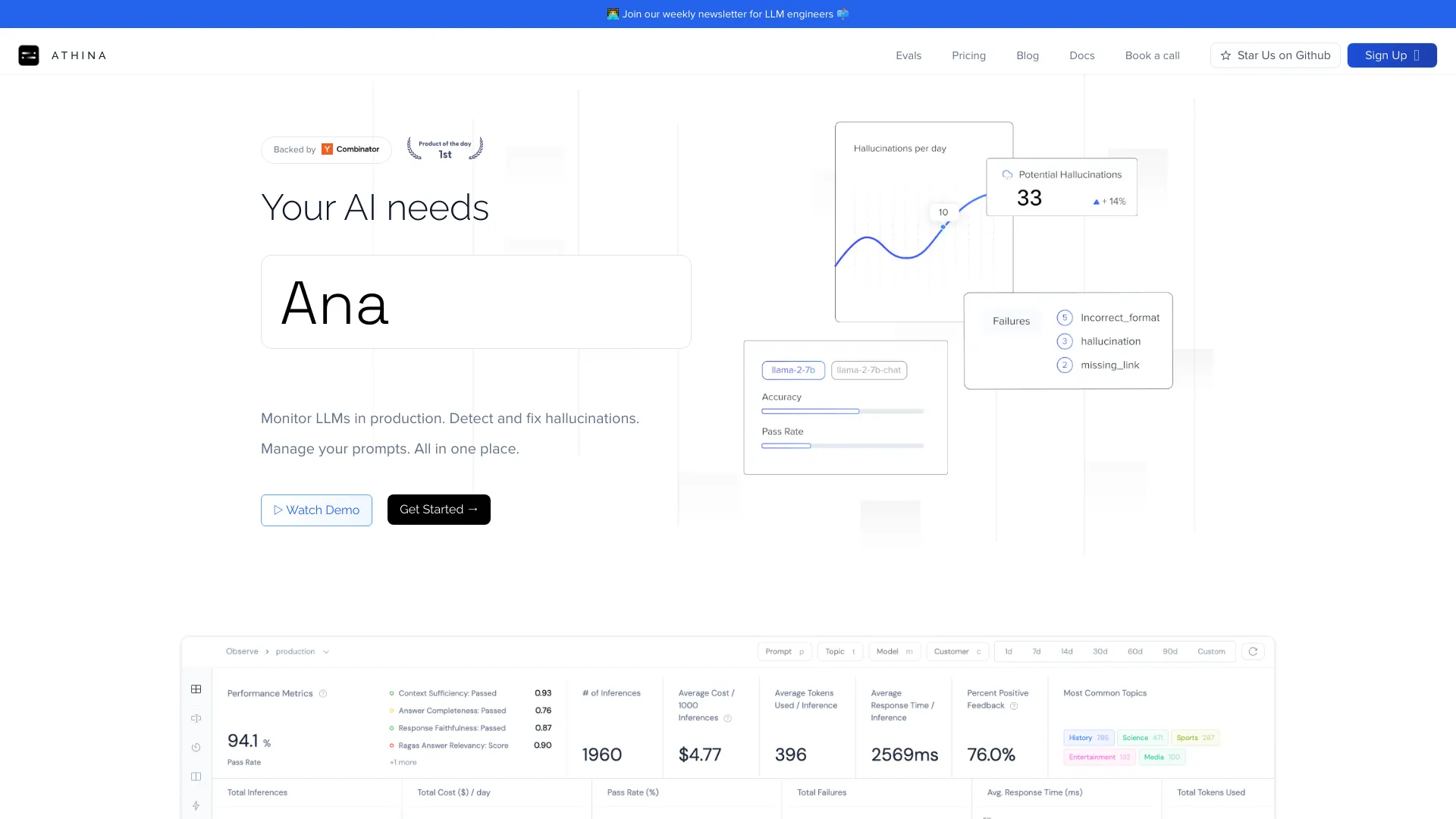

What is Athina AI?

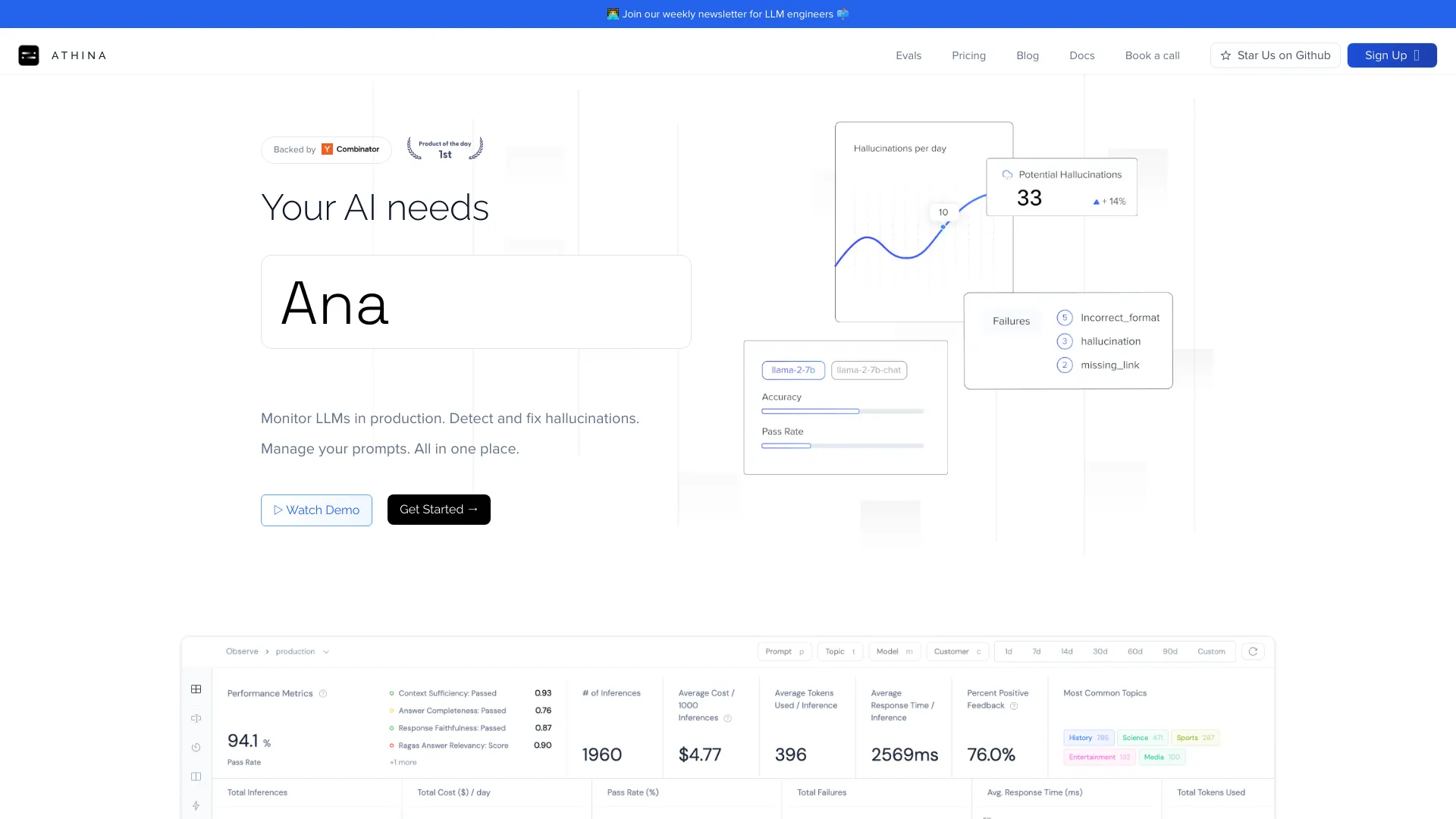

Athina helps developers monitor and evaluate their LLM applications in production.

How to use Athina AI?

Set up monitoring for your LLM application, then start running evaluations on its outputs.
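The snippet below does not use Athina's SDK. It is a generic, hypothetical sketch of the minimum that "setting up monitoring" involves: recording each LLM call (prompt, retrieved context, response, latency) so it can be evaluated later. `logged_call`, `LLMCallRecord`, the JSONL log file, and `fake_llm` are illustrative names only, not part of any library.

```python
import json
import time
from dataclasses import asdict, dataclass, field
from typing import Callable, List


@dataclass
class LLMCallRecord:
    """One logged LLM interaction (hypothetical schema, not Athina's)."""
    prompt: str
    response: str
    context: List[str] = field(default_factory=list)
    latency_ms: float = 0.0
    timestamp: float = 0.0


def logged_call(llm: Callable[[str], str], prompt: str, context: List[str],
                log_path: str) -> str:
    """Call `llm`, append one JSON line describing the call to `log_path`, return the response."""
    start = time.perf_counter()
    response = llm(prompt)
    record = LLMCallRecord(
        prompt=prompt,
        response=response,
        context=context,
        latency_ms=(time.perf_counter() - start) * 1000,
        timestamp=time.time(),
    )
    with open(log_path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(record)) + "\n")
    return response


def load_records(log_path: str) -> List[LLMCallRecord]:
    """Read logged calls back so they can be evaluated offline."""
    with open(log_path, encoding="utf-8") as f:
        return [LLMCallRecord(**json.loads(line)) for line in f if line.strip()]


if __name__ == "__main__":
    # Stand-in for a real model client; replace with your provider's SDK call.
    def fake_llm(prompt: str) -> str:
        return "Paris is the capital of France."

    answer = logged_call(fake_llm, "What is the capital of France?",
                         ["France's capital is Paris."], "llm_calls.jsonl")
    print(answer)
    print(len(load_records("llm_calls.jsonl")), "record(s) in the log")
```

Wrapping the model call, rather than logging inside application code, keeps every request in the same schema, which is what later evaluation runs depend on.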

Athina AI's Core Features

- Complete visibility into your RAG (retrieval-augmented generation) pipeline

- 40+ preset evaluation metrics to detect hallucinations and measure performance
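To show what "preset eval metrics" means in general terms, here is a minimal sketch of a metric registry: each metric scores one logged record, has a pass threshold, and is reported as a pass rate over a batch. The three metrics and their thresholds are simple hypothetical stand-ins; they are not Athina's preset evaluators, whose names and logic are not described here.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical record shape: one logged interaction with the user's query and the model's response.
Record = Dict[str, str]


@dataclass
class Metric:
    name: str
    score: Callable[[Record], float]  # maps a record to a score in [0, 1]
    threshold: float                  # a record passes when score >= threshold


def _words(text: str) -> set:
    """Lowercased words with surrounding punctuation stripped."""
    return {w.strip(".,!?").lower() for w in text.split() if w.strip(".,!?")}


def answer_relevance(r: Record) -> float:
    """Fraction of query words that reappear in the response (a rough relevance proxy)."""
    query = _words(r["query"])
    return len(query & _words(r["response"])) / len(query) if query else 1.0


def no_refusal(r: Record) -> float:
    """1.0 unless the response contains a common refusal phrase."""
    markers = ("i don't know", "i cannot", "as an ai")
    return 0.0 if any(m in r["response"].lower() for m in markers) else 1.0


def max_100_words(r: Record) -> float:
    """1.0 if the response is at most 100 words long."""
    return 1.0 if len(r["response"].split()) <= 100 else 0.0


METRICS = [
    Metric("answer_relevance", answer_relevance, 0.5),
    Metric("no_refusal", no_refusal, 1.0),
    Metric("max_100_words", max_100_words, 1.0),
]


def run_evals(records: List[Record], metrics: List[Metric]) -> Dict[str, float]:
    """Return each metric's pass rate over a batch of records."""
    if not records:
        raise ValueError("no records to evaluate")
    return {
        m.name: sum(m.score(r) >= m.threshold for r in records) / len(records)
        for m in metrics
    }


if __name__ == "__main__":
    batch = [
        {"query": "capital of France", "response": "The capital of France is Paris."},
        {"query": "boiling point of water", "response": "I don't know."},
    ]
    print(run_evals(batch, METRICS))
    # {'answer_relevance': 0.5, 'no_refusal': 0.5, 'max_100_words': 1.0}
```

Keeping each metric as an independent scoring function with its own threshold makes it easy to add, remove, or retune checks without touching the batch runner.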

Athina AI's Use Cases

- Monitor LLMs in production

- Detect and fix hallucinations

- Manage prompts
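Prompt management usually starts with versioning, so that each logged response can be traced back to the exact prompt that produced it. The class below is a hypothetical in-memory sketch of that idea using the standard library's `string.Template`; `PromptRegistry` and its methods are illustrative names, not Athina's prompt-management API.

```python
from string import Template
from typing import Dict, List, Optional


class PromptRegistry:
    """In-memory store of versioned prompt templates (hypothetical, not Athina's API)."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[str]] = {}

    def register(self, name: str, template: str) -> int:
        """Add a new version of prompt `name` and return its 1-based version number."""
        self._versions.setdefault(name, []).append(template)
        return len(self._versions[name])

    def get(self, name: str, version: Optional[int] = None) -> str:
        """Return the requested version of `name`, or the latest if `version` is None."""
        versions = self._versions[name]  # KeyError if the prompt was never registered
        if version is None:
            return versions[-1]
        if not 1 <= version <= len(versions):
            raise KeyError(f"prompt {name!r} has no version {version}")
        return versions[version - 1]

    def render(self, name: str, version: Optional[int] = None, **variables: str) -> str:
        """Fill $placeholders in the template; raises KeyError if a variable is missing."""
        return Template(self.get(name, version)).substitute(**variables)


if __name__ == "__main__":
    registry = PromptRegistry()
    registry.register("qa", "Answer using this context:\n$context\n\nQuestion: $question")
    latest = registry.register(
        "qa",
        "Answer using only the context below. If it does not contain the answer, say so.\n"
        "$context\n\nQuestion: $question",
    )
    prompt = registry.render("qa", context="Paris is in France.", question="Where is Paris?")
    print(f"v{latest}:\n{prompt}")
```

Storing the prompt name and version number alongside each logged call would let evaluation results be compared across prompt versions, for example to check whether a revised prompt reduces hallucinations.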

FAQ from Athina AI

How long does it take to integrate Athina AI into my application?

[Your answer here]

Is Athina AI compatible with all LLMs?

[Your answer here]

Can I host Athina AI on my own infrastructure?

[Your answer here]

Athina AI Support

For support, please contact us by email or visit our Contact Us page.

Athina AI Company

Athina AI Company name: Athina AI.

Athina AI Pricing

Athina AI Pricing Link: https://athina.ai/#pricing

Athina AI LinkedIn

Athina AI LinkedIn Link: https://www.linkedin.com/company/athina-ai

Athina AI GitHub

Athina AI GitHub Link: https://github.com/athina-ai/athina-evals?utm_source=navbar&utm_medium=website