Vellum

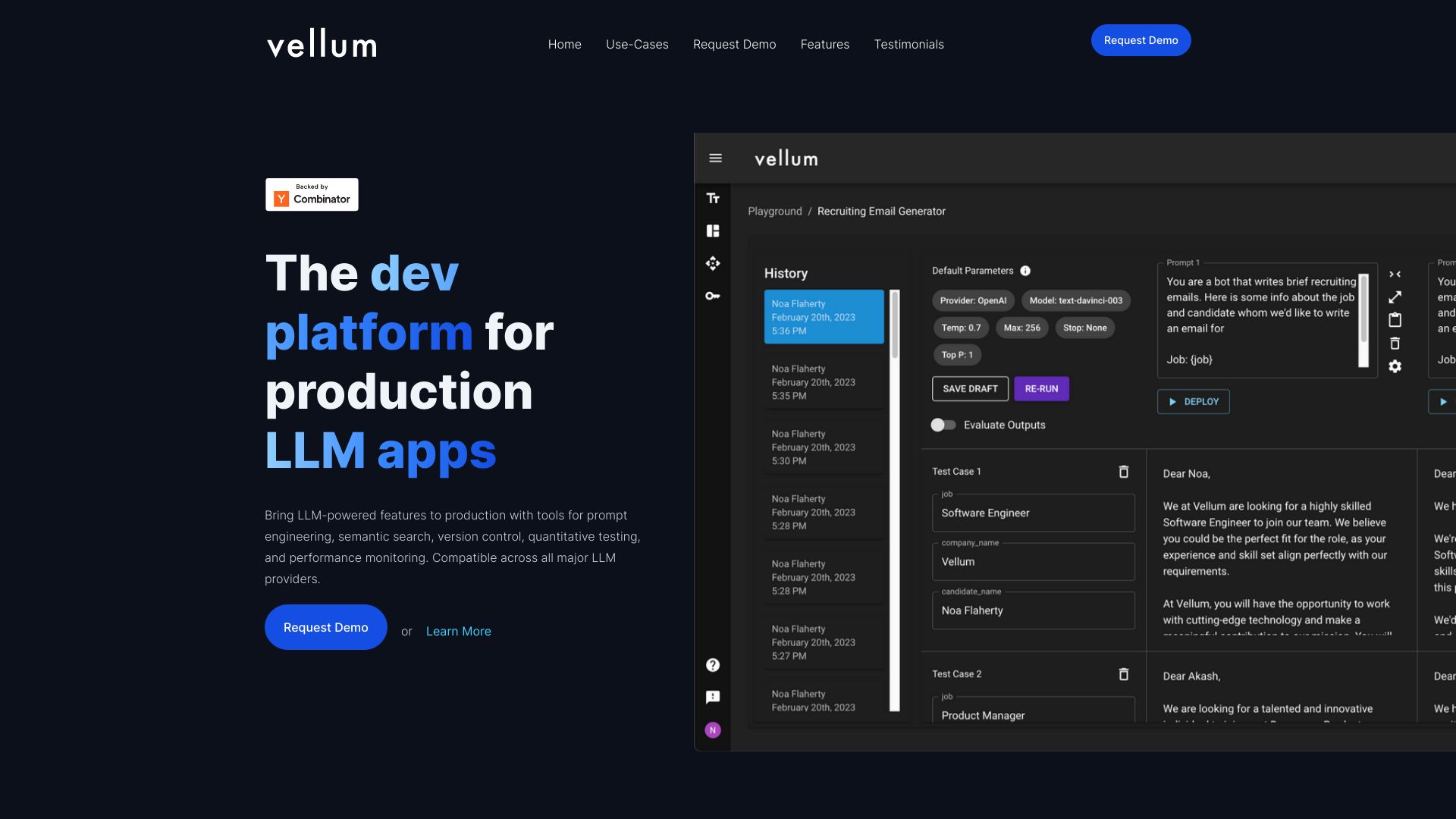

Development Platform for LLM Apps: Unlocking the Future of AI

In today's rapidly evolving technological landscape, a development platform for LLM (Large Language Model) applications is crucial for businesses looking to harness artificial intelligence. Such a platform gives developers the tools and resources they need to build innovative applications on top of LLM capabilities.

Key Features of the Development Platform:

- User-Friendly Interface: An intuitive interface simplifies development, letting developers focus on building robust applications instead of getting bogged down in low-level technical details.
- Comprehensive API Access: Extensive API support makes it easy to integrate LLM functionality into applications, improving user experience and engagement.
- Scalability: The platform is designed to scale from small projects to large enterprise solutions, so applications can grow alongside the business.
- Rich Documentation and Support: Detailed documentation and a supportive community help developers troubleshoot issues and optimize their applications.
- Security and Compliance: The platform prioritizes security and is built to help applications meet industry standards and regulations for protecting user data and privacy.

By using this development platform for LLM apps, businesses can build capable, intelligent applications that meet user demands and drive engagement. Embrace the future of AI development and unlock new possibilities today!

Category: code-it, ai-app-builder

Created At: 2024-12-15

Vellum AI Project Details

What is Vellum?

Vellum is a development platform for building LLM apps, with tools for prompt engineering, semantic search, version control, testing, and monitoring. It is compatible with all major LLM providers.
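Semantic search in an LLM app usually means retrieving the documents most relevant to a question and passing them to the model as context. The minimal Python sketch below illustrates that general pattern only; it is not Vellum's API. The helpers `embed`, `cosine`, `search`, and `build_prompt` are hypothetical, and a toy word-count vector stands in for a learned embedding model, so the matching here is lexical rather than truly semantic. A real setup would swap `embed` for an embedding model and send the resulting prompt to an LLM provider.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query (ties keep input order)."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, documents: list[str]) -> str:
    """Place the retrieved documents as context ahead of the question."""
    context = "\n".join(f"- {d}" for d in search(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


docs = [
    "Refunds are issued within 14 days of a return.",
    "Our office is open Monday to Friday.",
    "Shipping to Canada takes 5 business days.",
]
print(build_prompt("How long do refunds take?", docs))
```

Keeping retrieval separate from prompt construction makes it easy to replace the toy `embed` with a real embedding model without touching the rest of the flow.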

How to use Vellum?

Users combine Vellum's tools for prompt engineering, semantic search, version control, testing, and monitoring to build LLM-powered applications and bring LLM-powered features to production. The platform supports rapid experimentation, regression testing, version control, and observability and monitoring. Users can also supply proprietary data as context in LLM calls, compare and collaborate on prompts and models, and test, version, and monitor LLM changes in production. Vellum is compatible with all major LLM providers and offers a user-friendly UI.
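Regression testing a prompt change generally means running a fixed set of test cases against both the old and new prompt versions and flagging cases that used to pass but now fail. The Python sketch below shows that general idea under stated assumptions; it does not use Vellum's API or SDK. `PromptVersion`, `EvalCase`, `run_regression`, `find_regressions`, and `fake_model` are hypothetical names, and `fake_model` is a stub standing in for a real LLM provider call so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class PromptVersion:
    """A named prompt template (hypothetical structure, not Vellum's)."""
    version: str
    template: str


@dataclass
class EvalCase:
    """Template variables plus a substring the model output must contain."""
    variables: dict
    expect_substring: str


def fake_model(prompt: str) -> str:
    """Stub standing in for a real LLM provider call."""
    if "refund" in prompt.lower():
        return "Refunds take 14 days."
    return "I don't know."


def run_regression(
    prompt: PromptVersion,
    cases: list[EvalCase],
    call_model: Callable[[str], str],
) -> dict[int, bool]:
    """Render each case with the prompt, call the model, record pass/fail."""
    results = {}
    for i, case in enumerate(cases):
        # str.format ignores keyword arguments the template does not use.
        rendered = prompt.template.format(**case.variables)
        output = call_model(rendered)
        results[i] = case.expect_substring.lower() in output.lower()
    return results


def find_regressions(old: dict[int, bool], new: dict[int, bool]) -> list[int]:
    """Indices of cases that passed under the old version but fail now."""
    return [i for i, passed in old.items() if passed and not new[i]]


cases = [
    EvalCase({"question": "How long do refunds take?"}, "14 days"),
    EvalCase({"question": "Do you ship to Mars?"}, "don't know"),
]
v1 = PromptVersion("v1", "Answer briefly: {question}")
v2 = PromptVersion("v2", "Answer politely.")

old = run_regression(v1, cases, fake_model)
new = run_regression(v2, cases, fake_model)
print(old, new, find_regressions(old, new))
```

In this example, version `v2` drops the `{question}` placeholder, so the stubbed model can no longer answer the refund question and that case, which passed under `v1`, is flagged as a regression.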